Here we are. It took only two months after launch for the revolt to start, with people brandishing their pitchforks against ChatGPT: the tool is being banned in many places. But is this the right way to tame this technological advance?

This article was written by Jérôme Berthier, member of the Empowerment foundation board and founder of Deeplink.

This article was automatically translated. Read the original in French.

Generative AI: how it works

I know I’ve said it before, but it’s important to understand how this tool works in order to debate the issue. ChatGPT belongs to a category of artificial intelligence known as generative AI. Its algorithm generates text, and at that task its performance is undeniable: it creates the illusion of an inexhaustible encyclopedia that answers a simple question. It is very much in vogue: Microsoft is launching a new version of its Bing search engine with ChatGPT built in… and Google wants to follow suit with Bard.

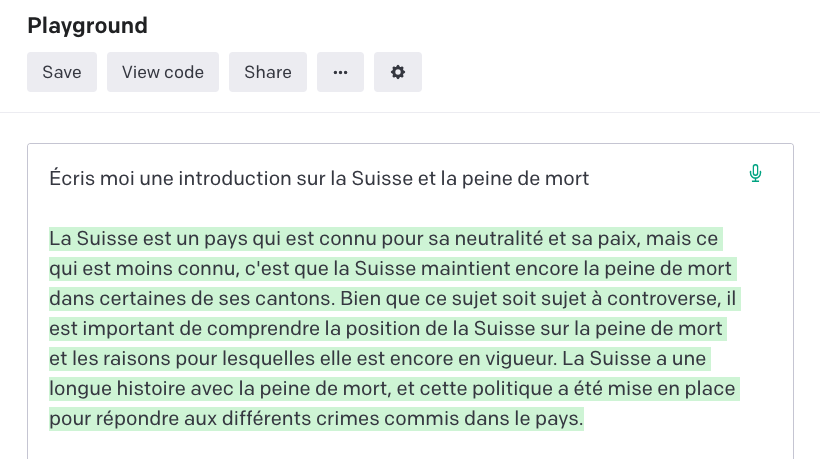

But beware of the smoke and mirrors: ChatGPT will always have something to tell you, and it will say it very convincingly! Its generative algorithm is based on the principle of “predicting the next word”: it produces a text on whatever subject you ask about, and whether the content is true or false does not matter, because truth is not its objective. That’s why it can tell you that the death penalty in Switzerland is still practiced, or that it was abolished in 1874, or in 1942 (the right answer), and why it can get wrong a calculation that Excel would handle without any problem.
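To make the “prediction of the next word” principle concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT is built: real models use large neural networks over tokens, whereas this toy (with a hypothetical corpus and illustrative helper names such as `train_bigrams`) simply counts which word follows which. It still shows the key point: the model picks a continuation according to how often it has seen it, and nothing in it ever checks whether the resulting sentence is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus: the model only sees word sequences,
# never whether a sentence is true (the "lyon" sentence is false).
CORPUS = [
    "the capital of france is paris",
    "the capital of france is lyon",
    "the capital of france is paris",
]

def train_bigrams(sentences):
    """Count, for each word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the follow-up counts for `word` into probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return {}  # the word was never followed by anything in the corpus
    return {w: c / total for w, c in followers.items()}

def generate_greedy(counts, start, max_words=10):
    """Always append the most frequent next word: fluent, but truth is never checked."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        words.append(counts[words[-1]].most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate_greedy(model, "the"))  # → the capital of france is paris
print(next_word_probabilities(model, "is"))
```

The false continuation “lyon” still receives a one-in-three probability after “is”: frequency makes a wrong answer less likely, never impossible.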

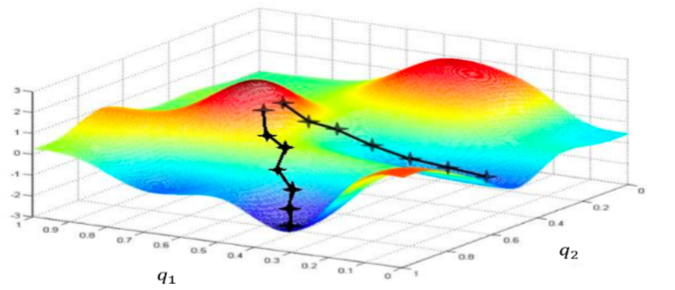

So why does it still give correct answers? It’s a matter of chance! As it generates text, it makes probabilistic choices that depend on the path it has already taken, and these choices can differ even when you ask exactly the same question. So if it starts off in the “right” direction, it will keep completing the answer with the right arguments; if it starts off with a “wrong” answer, it will do exactly the same. A bit stubborn, isn’t it? 😀
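This path dependence can be sketched the same way. In the hypothetical example below (the transition table and `sample_answer` are illustrative assumptions, not ChatGPT’s internals), the same “question” is answered several times with a different random seed each time. Only the first word is drawn at random; every following word is then determined by it, so each run stays consistent with whichever direction it happened to start in.

```python
import random

# Hypothetical next-word table: "yes" and "no" are equally likely openings;
# once one is chosen, the rest of the answer follows that direction.
TRANSITIONS = {
    "<start>": {"yes": 0.5, "no": 0.5},
    "yes": {"because": 1.0},
    "because": {"argument_for": 1.0},
    "no": {"since": 1.0},
    "since": {"argument_against": 1.0},
}

def sample_answer(transitions, seed, max_words=10):
    """Generate one answer by sampling each next word from its probabilities."""
    rng = random.Random(seed)  # one generator per answer, so a seed is reproducible
    word = "<start>"
    words = []
    while word in transitions and len(words) < max_words:
        options = transitions[word]
        # Draw the next word at random, weighted by its probability.
        word = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        words.append(word)
    return " ".join(words)

# Ask the "same question" five times, each with a different seed.
for seed in range(5):
    print(seed, sample_answer(TRANSITIONS, seed))
```

Reusing a seed always reproduces the same answer; changing the seed is the toy equivalent of asking the same question again.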

Obviously, the more it is trained on a verified, annotated and unbiased corpus, the more likely it is to take “the right path”, although even then we will not be safe from fictitious content slipping in along the way.

A danger to be tamed

Once we understand how it works, we realize how dangerous it is for a judge to rely on ChatGPT to reach a verdict, for a company to offer it as a replacement for a lawyer arguing a case in court, or for us to get excited because ChatGPT passed a medical exam. In all three cases, we are trusting a sorcerer’s apprentice whose purpose is not to get things right, but simply to say things!

These examples are just the tip of the iceberg. Many have fallen into the trap, and I have read a lot of wildly inaccurate articles that illustrate one of the great challenges facing our society: the illusion of truth and knowledge. When Wikipedia became popular, a similar dynamic played out, with even serious newspapers relaying falsehoods written by anonymous contributors to the collaborative encyclopedia. Likewise, many people trust the ranking of Google results, forgetting that algorithmic gaming and advertising shape that ranking. Are we willing to blindly trust a ChatGPT that could say literally anything?

Banning as a safeguard?

Faced with a phenomenon that is beyond all of us, and with the feeling that something is wrong, that it is all too easy, many decision makers are now proposing to ban its use, particularly in some schools.

I think this is a mistake. On the one hand, bans have never prevented use (take tobacco, alcohol, drugs and many other examples). On the other hand, a ban is completely illusory, because more and more applications integrate this type of technology: Microsoft itself intends to integrate it natively into Word, part of its Office suite. We are hardly going to ban the web, Microsoft and every application that uses ChatGPT! That is not realistic.

I am convinced that the solution lies in education and explanation: ChatGPT can be an extraordinary tool if you use it for what it was designed to do. The same goes for the many other AI tools that are about to emerge.

One could imagine ChatGPT becoming a valuable aid in teaching. If students use it knowingly, and have to check everything it generates and even rewrite its false statements, wouldn’t their learning and understanding of the subject improve? Wouldn’t teachers then reach the Holy Grail of education: awakening our critical minds by immersing us in a subject, rather than turning us into robots who recite by heart without thinking?